Deep Dive Into Containers (Part 1)

What are containers?

Containers have been around for a while, and their usage has grown sharply in the past few years. So what is a container, how does it work, and what is unique about it?

Containers from the perspective of a Layman.

A container is any receptacle or enclosure for holding a product used in storage, packaging, and shipping. Things kept inside of a container are protected by being inside of its structure.

- Wikipedia

But this is not quite what we are talking about here.

Containers from the perspective of a Tech professional.

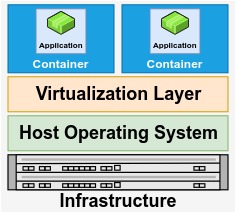

A container is an enclosure that holds your application or software and serves a specific purpose. To be concise, it is a form of OS-level virtualization. A container running on a host has its own isolated process tree and resources. More often than not, a container is packaged to execute a single task that can run independently, which makes it a natural fit for the single-responsibility principle behind microservice architecture.

A container is similar to a virtual machine, but instead of using a hypervisor it takes advantage of the host machine’s kernel. Containers do not virtualize an entire OS, so they can start quickly and with fewer resources. They use features of the host kernel to isolate processes and resources.
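
To make those kernel features a little more concrete: on Linux, much of the isolation comes from namespaces, and the kernel exposes each process’s namespace memberships as symlinks under /proc/<pid>/ns. The snippet below is only a minimal sketch that lists them for the current process. The helper name `list_namespaces` is made up for this post, and on hosts without /proc it simply returns an empty result.

```python
import os


def list_namespaces(pid="self"):
    """Return {namespace_type: identifier} for a process, read from /proc.

    Returns an empty dict where /proc/<pid>/ns is unavailable
    (for example on non-Linux hosts).
    """
    ns_dir = f"/proc/{pid}/ns"
    namespaces = {}
    try:
        entries = sorted(os.listdir(ns_dir))
    except OSError:
        return namespaces
    for name in entries:
        try:
            # Each entry is a symlink whose target looks like "pid:[4026531836]".
            namespaces[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            # Some entries may be unreadable without extra privileges.
            continue
    return namespaces


if __name__ == "__main__":
    for ns_type, ident in list_namespaces().items():
        print(f"{ns_type:20} {ident}")
```

Run once on the host and once inside a container, the identifiers for namespaces such as pid and mnt will usually differ, which is the isolation described above.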

Shipping a container from development to production is secure and easy thanks to this architecture. When a container is built, every dependency needed to run the application is packaged with it, and this has changed the way we develop and maintain applications. It helps build a good relationship between development and operations teams and has been a great advantage to the software development life cycle.

Evolution of Containers

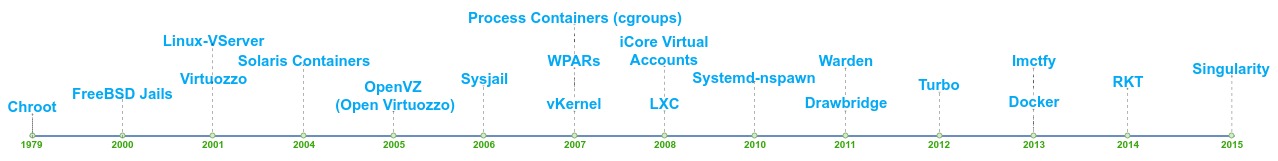

Surprisingly, it all started back in 1979, when Unix Version 7 introduced chroot, an early form of isolation that resembles containerization.

Chroot creates an isolated environment that separates a running process and its children from the primary system. It gives the process a new root path taken from a directory on your filesystem, so the enclosed process and its children cannot reach files or device nodes outside that directory. Changing root is usually performed for system maintenance, privilege separation, and testing and development.
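
The effect of a new root on path lookups can be sketched with a toy model. This is not how you enter a chroot (in Python the real call is `os.chroot`, which needs root privileges); it only imitates the rule that ".." at the root stays at the root. The function name `resolve_in_jail` and the /srv/jail directory are hypothetical, chosen just for illustration.

```python
import posixpath


def resolve_in_jail(jail_root, requested_path):
    """Toy model of path lookup for a process whose root is `jail_root`.

    Mirrors the rule that ".." at the root stays at the root, so the jailed
    process cannot name anything above `jail_root`. It ignores symlinks and
    is not a security boundary.
    """
    # Treat the request as absolute inside the jail, then collapse "." and "..".
    inside = posixpath.normpath(posixpath.join("/", requested_path))
    # normpath never climbs above "/", so `inside` is always rooted at "/".
    return posixpath.normpath(posixpath.join(jail_root, inside.lstrip("/")))


if __name__ == "__main__":
    # An attempt to climb out of the jail still lands inside it.
    print(resolve_in_jail("/srv/jail", "/../../etc/passwd"))
```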

Sysjail is an obsolete user-land virtualizer for systems supporting the systrace library - as of version 1.0 limited to OpenBSD, NetBSD, and MirOS. Its original design was inspired by FreeBSD jail. The project was officially discontinued in 2009 due to flaws inherent to syscall wrapper-based security architectures.

In 2007, IBM’s AIX (Advanced Interactive eXecutive), which is based on a version of UNIX, introduced a new virtualization tool: Workload Partitions (WPARs). AIX WPARs are software partitions that are created from, and share the resources of, a single instance of the AIX operating system. Each WPAR has its own private execution environment, with its own filesystems and network addresses. There are two types: system workload partitions and application workload partitions. A system WPAR behaves like a complete installation of AIX, while an application WPAR is a lightweight environment used for isolating and executing a single application process.

Google developed a technology known as Process Containers, which was later renamed control groups (cgroups). It limits and measures the total resources (CPU, memory, block I/O, network) used by a group of processes running on a system. Cgroups were merged into the Linux kernel in 2008.
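
On a Linux host you can see which cgroups a process belongs to by reading /proc/<pid>/cgroup, where each line has the form hierarchy-ID:controller-list:cgroup-path. Here is a small sketch that parses that format; the function names `parse_proc_cgroup` and `current_cgroups` are our own, and the sample paths in the usage note are invented.

```python
def parse_proc_cgroup(text):
    """Parse the contents of /proc/<pid>/cgroup.

    Each line has the form "hierarchy-ID:controller-list:cgroup-path".
    On a cgroup v2 (unified) host there is a single line such as "0::/...".
    Returns a list of (hierarchy_id, controllers, path) tuples.
    """
    entries = []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        # Split at most twice: the path itself may contain ":".
        hierarchy_id, controllers, path = line.split(":", 2)
        names = controllers.split(",") if controllers else []
        entries.append((int(hierarchy_id), names, path))
    return entries


def current_cgroups():
    """Return the parsed cgroup membership of this process, or [] if unavailable."""
    try:
        with open("/proc/self/cgroup") as handle:
            return parse_proc_cgroup(handle.read())
    except OSError:
        return []
```

For example, `parse_proc_cgroup("12:cpu,cpuacct:/docker/abc\n0::/user.slice\n")` yields one entry with the cpu and cpuacct controllers and one entry with an empty controller list for the unified hierarchy.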

A few more projects were released around the same time. In 2008, iCore Software released iCore Virtual Accounts for Microsoft Windows XP. It lets the user set up multiple virtual “accounts” (virtual machines) that can be created or deleted easily without affecting each other’s state or the state of the core operating system.

systemd-nspawn, released for Linux in 2010, was another such project. It is similar to the chroot command but more powerful, since it fully virtualizes the file system hierarchy as well as the process tree, the various IPC subsystems, and the host and domain name. It requires no specific image format: we can point nspawn at an OS root directory and start a container from it.

Microsoft announced Drawbridge in 2011, a research prototype of a new form of virtualization for application sandboxing. Drawbridge combines two core technologies. The first is a picoprocess, a process-based isolation container with a minimal kernel API surface. The second is a library OS, a version of Windows enlightened to run efficiently within a picoprocess.

lmctfy (Let Me Contain That For You) was initially released in 2013 as an open-source version of Google’s container stack, providing containerization for Linux applications. lmctfy containers isolate the resources used by multiple applications running on a single machine and give each application the impression of running exclusively on that machine. Applications may also be container-aware and thus able to create and manage their own sub-containers.

lmctfy was designed and implemented with specific use cases and configurations in mind and may not work out of the box for all of them. The project has since stalled: in 2015, Google decided to contribute core lmctfy concepts and abstractions to libcontainer.

Libcontainer lets you manage the lifecycle of a container and perform additional operations after the container is created. Libcontainer later became part of runc, a command-line client that wraps the libcontainer library. runc is one implementation of the OCI runtime specification; the Open Container Initiative is a Linux Foundation Collaborative Project.

Docker is popular because it lets you start a containerized application with minimal effort through a command-line interface, an API, and a GUI. Docker helps teams migrate to microservices, yet Docker itself was a monolith. This created overhead for applications relying on Docker, and the result was a redesign of the entire Docker project around a microservice architecture.

Docker’s use of an image to initialize a container was another advantage over other technologies, and Docker images can be shared with the community. Docker builds an image from union filesystem layers stacked on top of one another. The AUFS (union file system) storage driver was previously the default for managing images and layers. If your Linux kernel is version 4.0 or higher and you use Docker CE, you can use OverlayFS, a modern union filesystem that is similar to AUFS but faster and simpler in its implementation.
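
The layering idea can be sketched with plain dictionaries. This is a toy model, not how AUFS or OverlayFS are implemented: each layer maps a path to its content, upper layers override lower ones, and a `WHITEOUT` marker (our own stand-in for the whiteout entries union filesystems use to hide deleted files) removes a path from the merged view. All names and sample paths here are invented for illustration.

```python
WHITEOUT = object()  # marker meaning "this file was deleted in this layer"


def lookup(layers, path):
    """Return the content of `path` as seen through a stack of layers.

    `layers` is ordered bottom-first: the last element is the topmost
    (writable) layer. The first match from the top wins.
    """
    for layer in reversed(layers):
        if path in layer:
            content = layer[path]
            if content is WHITEOUT:
                raise FileNotFoundError(path)
            return content
    raise FileNotFoundError(path)


def merged_view(layers):
    """Flatten the stack into the single directory tree a container would see."""
    view = {}
    for layer in layers:  # apply bottom-up so upper layers override lower ones
        for path, content in layer.items():
            if content is WHITEOUT:
                view.pop(path, None)
            else:
                view[path] = content
    return view


if __name__ == "__main__":
    base = {"/etc/os-release": "base", "/bin/sh": "shell"}
    app = {"/app/main.py": "print('hi')", "/etc/os-release": "patched"}
    scratch = {"/bin/sh": WHITEOUT}  # topmost layer deletes /bin/sh
    print(sorted(merged_view([base, app, scratch])))
```

Because lower layers are never modified, many images can share the same base layer, which is part of what makes images cheap to store and share.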

Rkt features a pod-native approach, a pluggable execution environment, and a well-defined surface area that makes it ideal for integration with other systems. CoreOS initiatives led to the formation of the Open Container Initiative (OCI). The Container Network Interface (CNI), which was part of rkt’s pluggable architecture, later became a standard for container network plugins. Rkt grew fast and was able to take some market share from Docker.

Singularity workflow: building a container takes two steps. Create the image, then bootstrap the container from a definition file; after that we can start the container. A Singularity container can be built in much the same way as a Docker container, but the main difference is that we can start the container, add dependencies and necessary files to it, and then create the container image. A user inside a Singularity container is the same user as outside the container.

Virtual Machine (VM) vs. Containers

What is Hypervisor?

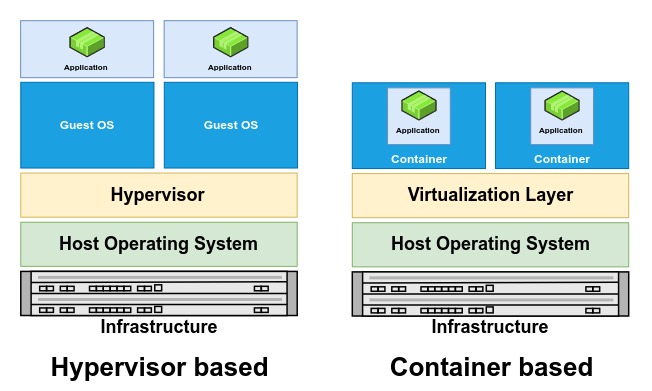

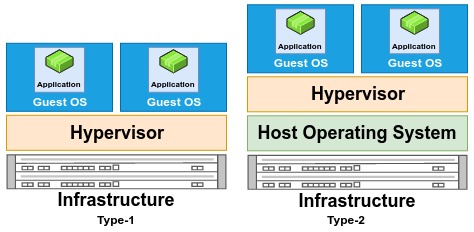

Hypervisor-based virtualization is a kind of isolation that works at the hardware level. Hypervisors are software that can run directly on the hardware or on top of a host operating system. The isolated environment created on top of the hypervisor is called a virtual machine (VM). Hypervisor implementations may involve specialized hardware, software, or a combination of the two.

The hypervisor takes care of provisioning resources such as CPU, disk, and network to the virtual machines. VMs running on the same physical hardware are logically separated from each other.
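
The specialized hardware mentioned above usually means CPU virtualization extensions. On x86 Linux hosts, the flags line in /proc/cpuinfo lists vmx (Intel VT-x) or svm (AMD-V) when the CPU advertises them. The following is a rough sketch that looks for those two flags in cpuinfo text; the function name `hardware_virtualization_flags` is hypothetical, and other architectures report their features differently.

```python
def hardware_virtualization_flags(cpuinfo_text):
    """Return the hardware-virtualization CPU flags found in /proc/cpuinfo text.

    "vmx" indicates Intel VT-x and "svm" indicates AMD-V (x86 only).
    """
    found = set()
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            found.update(flag for flag in value.split() if flag in ("vmx", "svm"))
    return found


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as handle:
            print(hardware_virtualization_flags(handle.read()) or "no vmx/svm flag found")
    except OSError:
        print("/proc/cpuinfo is not available on this host")
```

An empty result does not prove that the hardware lacks the feature, since the extensions can be disabled in firmware or hidden from a guest.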

Classification of hypervisors

Hypervisors that run directly on top of the hardware to manage guest virtual machines are called bare-metal hypervisors (Type-1). Xen, Oracle VM Server for SPARC, Microsoft Hyper-V, and VMware ESX/ESXi are examples of Type-1 hypervisors.

Hypervisors that run on top of an operating system (OS) are called hosted hypervisors (Type-2). Type-2 hypervisors abstract guest operating systems from the host operating system. VMware Workstation, VirtualBox, Parallels Desktop for Mac, and QEMU are examples of Type-2 hypervisors.

I plan to write about containers as a series of posts. In the subsequent posts, we will look into container internals, orchestration, the advantages and disadvantages of containers, the future of containers, and the latest container technologies such as Podman and NVIDIA GPU-accelerated containers.

I would greatly appreciate your feedback and suggestions. Let’s learn together!

This is a post in the containers-deep-dive series. Stay tuned for more posts in this series.